Scary ‘Fix Index Coverage Issues’ mail from Google

To give webmasters explicit information and activity reports about how their content is indexed, Google recently released a new beta version of its Search Console reports. Soon after the release, I received an alarming e-mail from Google that made me realize something was wrong with my website and that it could affect my SEO ranking. I opened Search Console and found a message stating that index coverage issues had been found on my website.

I immediately googled the issue and found that many other people were facing the same problem and receiving similar e-mails from Google. I breathed a sigh of relief, but I was also worried, because I didn't want my website to suffer for violating some Google rule. The first question that came to mind after reading the e-mail was: what is an index coverage issue, and how do I resolve it?


What are Index Coverage Issues?

Index Coverage is a Google Search Console report that was introduced in early August 2017. It shows how many pages of a site are indexed and how many are not indexed because of errors. These issues mostly point to problems in your XML sitemaps, potential 404 URLs, and other indexing problems.

If errors on your pages prevent Google from indexing them, Google sends the index coverage report to your e-mail and offers tips for resolving the issues. Google also lets you export the data for offline use.

How to Fix New Index Coverage Issues?

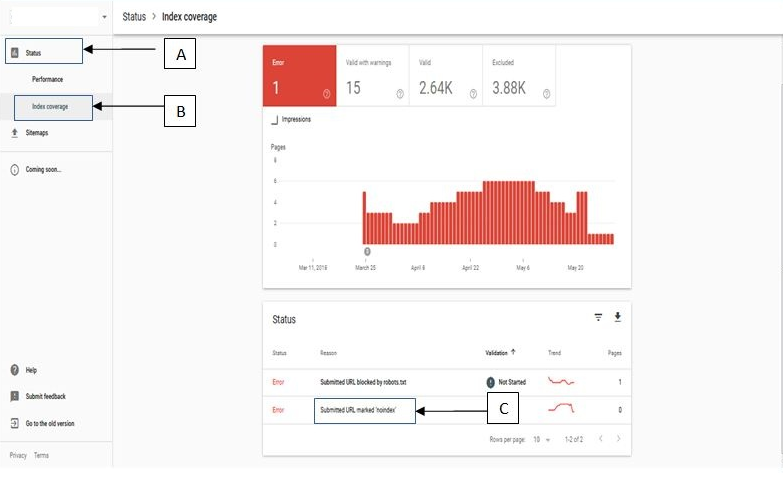

1) To fix the issue, first log in to Google Search Console (formerly Webmaster Tools). Then:

- Click Status (A).

- Click Index Coverage (B).

- Finally, click Submitted URL marked ‘noindex’ (C).

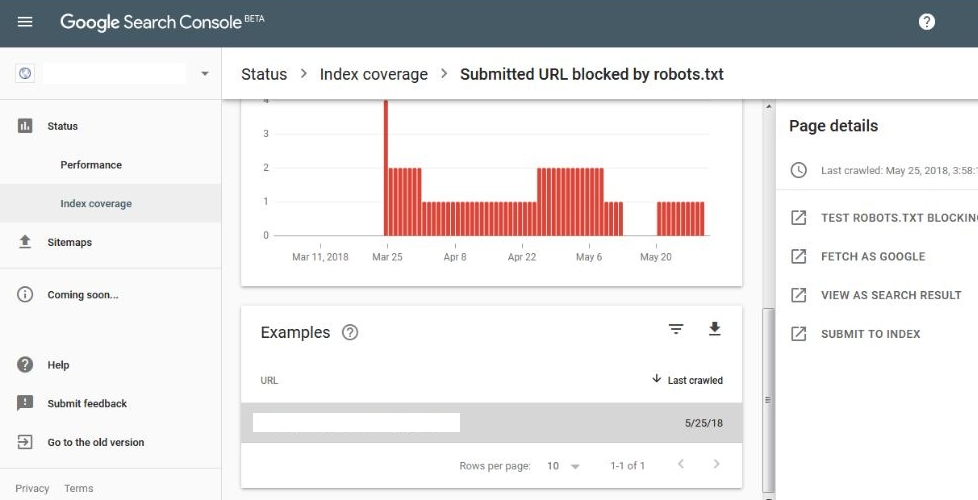

2) Google now displays four tools you can use to fix the issue. They are listed below and can also be seen in the screenshot:


- Test Robots.txt Blocking

- Fetch as Google

- View as Search Result

- Submit to Index
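Alongside Google's Test Robots.txt Blocking tool, you can sanity-check a robots.txt file locally. The sketch below uses Python's standard `urllib.robotparser` module; the robots.txt content, the `googlebot_allowed` helper and the example.com URLs are hypothetical. One caveat: `robotparser` applies the first matching rule inside a group rather than Google's longest-match rule, so treat this as a rough check, not a replacement for Google's own tester.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; replace it with your site's real file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/

User-agent: Googlebot
Disallow: /drafts/
"""


def googlebot_allowed(robots_txt: str, url: str, agent: str = "Googlebot") -> bool:
    """Return True if the given robots.txt rules let `agent` fetch `url`."""
    parser = RobotFileParser()
    # parse() takes an iterable of lines, so no network request is made.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


if __name__ == "__main__":
    for url in (
        "https://example.com/blog/post-1",
        "https://example.com/drafts/post-2",
        "https://example.com/private/page",
    ):
        verdict = "allowed" if googlebot_allowed(ROBOTS_TXT, url) else "blocked"
        print(url, "->", verdict)
```

Note that /private/page comes out as allowed for Googlebot: once a robots.txt file has a group addressed to Googlebot, Googlebot follows that group and ignores the `User-agent: *` group, so the /private/ rule only applies to other crawlers.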

3) Work out which of the following causes applies to your site and resolve it:

- Your robots.txt file is blocking Google from accessing pages on your site.

- Googlebot cannot fetch the pages.

- The pages are not being indexed because they carry a meta robots tag such as “noindex, nofollow” or “noindex, follow”.

- Your sitemap contains inaccuracies that need your attention. Check it for errors, correct them, and resubmit it.

- Once you're confident you have found the problem and made the changes, use the tool to resubmit the URLs to Google for indexing.
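The ‘Submitted URL marked noindex’ error means that a URL you submitted (typically through your sitemap) tells Google not to index it. The sketch below cross-checks the two using only Python's standard library: it reads the `<loc>` entries from a sitemap and flags any listed page whose HTML contains a robots or googlebot meta tag with `noindex`. The sitemap, the page HTML and all function names are hypothetical; the pages are passed in as a dictionary so the example runs offline, whereas in practice you would fetch each URL. It also does not check the `X-Robots-Tag` HTTP header, which can carry `noindex` too.

```python
import xml.etree.ElementTree as ET
from html.parser import HTMLParser

# Namespace used by standard XML sitemaps (sitemaps.org protocol).
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml: str) -> list[str]:
    """Return every <loc> URL listed in a standard XML sitemap."""
    root = ET.fromstring(sitemap_xml)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS) if loc.text]


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr_map = dict(attrs)
        name = (attr_map.get("name") or "").lower()
        if name in ("robots", "googlebot"):
            content = attr_map.get("content") or ""
            self.directives.update(d.strip().lower() for d in content.split(","))


def has_noindex(html: str) -> bool:
    """True if the page's meta robots directives include noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return "noindex" in parser.directives


def submitted_but_noindex(sitemap_xml: str, pages: dict[str, str]) -> list[str]:
    """Sitemap URLs whose (already downloaded) HTML carries a noindex meta tag."""
    return [url for url in sitemap_urls(sitemap_xml) if url in pages and has_noindex(pages[url])]


# Hypothetical sitemap and page HTML for illustration.
SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/tag/seo/</loc></url>
</urlset>"""

PAGES = {
    "https://example.com/": "<html><head><title>Home</title></head><body></body></html>",
    "https://example.com/tag/seo/": '<html><head><meta name="robots" content="noindex, follow"></head></html>',
}

if __name__ == "__main__":
    print(submitted_but_noindex(SITEMAP, PAGES))  # ['https://example.com/tag/seo/']
```

Each URL this reports needs one of two fixes: remove the `noindex` tag if the page should be indexed, or drop the URL from the sitemap if it should not.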

The ‘catch’ in the last point…

When I ran into the ‘Index coverage issues’, I found the problem, fixed it, verified the fix and resubmitted the URLs to Google. To my surprise, another ‘Fix index coverage issues’ e-mail from Google was waiting in my inbox. It came as a shock, because I was confident I had fixed the issue and was just waiting for the page to be indexed. I am still unsure whether this is a bug on Google's end or a problem with my website.

Has anyone else run into the same issue?